2 rtemis in 60 seconds

2.1 Load rtemis

```r
library(rtemis)
```

2.2 Regression

For regression, the outcome must be continuous.

```r
# Simulate 500 cases with 50 continuous features
x <- rnormmat(500, 50, seed = 2019)
# Outcome: linear combination of the features plus Gaussian noise
w <- rnorm(50)
y <- x %*% w + rnorm(500)
dat <- data.frame(x, y)
# Create 10 stratified subsamples
res <- resample(dat)
```

```
06-30-24 10:56:52 Input contains more than one columns; will stratify on last [resample]

.:Resampling Parameters
  n.resamples: 10
  resampler: strat.sub
  stratify.var: y
  train.p: 0.75
  strat.n.bins: 4

06-30-24 10:56:52 Created 10 stratified subsamples [resample]
```
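The parameters above describe stratified subsampling: the outcome is split into `strat.n.bins` strata and `train.p` of the cases are drawn from each stratum. The following is a minimal base-R sketch of that idea, not rtemis's implementation; it assumes quantile bins for a numeric outcome and uses the class labels as strata for a factor. `strat_subsample` is a hypothetical helper.

```r
# Hypothetical helper illustrating stratified subsampling (not rtemis code).
# Returns a list of training-set row indices, one element per resample.
strat_subsample <- function(y, n_resamples = 10, train_p = 0.75,
                            n_bins = 4, seed = NULL) {
  if (!is.null(seed)) set.seed(seed)
  # Strata: class labels for a factor, quantile bins for a numeric outcome
  strata <- if (is.factor(y)) {
    y
  } else {
    breaks <- unique(quantile(y, probs = seq(0, 1, length.out = n_bins + 1)))
    cut(y, breaks = breaks, include.lowest = TRUE)
  }
  idx_by_stratum <- split(seq_along(y), strata)
  lapply(seq_len(n_resamples), function(i) {
    # Draw train_p of the cases within each stratum, then pool them
    train <- unlist(lapply(idx_by_stratum, function(idx) {
      idx[sample.int(length(idx), size = round(train_p * length(idx)))]
    }), use.names = FALSE)
    sort(train)
  })
}

set.seed(2019)
y_sim <- rnorm(500)
subs <- strat_subsample(y_sim, seed = 2019)
length(subs)       # 10 resamples
length(subs[[1]])  # training cases in the first resample
```

The test set for each resample is the complement of its training indices, which is what negative indexing such as `dat[-res$Subsample_1, ]` selects.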

```r
# Use the first subsample for training and the remaining cases for testing
dat.train <- dat[res$Subsample_1, ]
dat.test <- dat[-res$Subsample_1, ]
```

2.2.1 Check Data

```r
check_data(x)
```

```
  x: A data.table with 500 rows and 50 columns

  Data types
  * 50 numeric features
  * 0 integer features
  * 0 factors
  * 0 character features
  * 0 date features

  Issues
  * 0 constant features
  * 0 duplicate cases
  * 0 missing values

  Recommendations
  * Everything looks good
```
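The three counts under "Issues" can be approximated in base R. The sketch below uses a hypothetical helper, `quick_check`, that is not part of rtemis; it counts constant columns, duplicated rows, and missing values in a data frame or matrix.

```r
# Hypothetical helper approximating the "Issues" section of check_data()
quick_check <- function(df) {
  df <- as.data.frame(df)
  c(
    # A column is constant if it has at most one distinct non-missing value
    constant_features = sum(vapply(df, function(col)
      length(unique(col[!is.na(col)])) <= 1, logical(1))),
    duplicate_cases = sum(duplicated(df)),
    missing_values = sum(is.na(df))
  )
}

set.seed(2019)
x_demo <- matrix(rnorm(500 * 50), nrow = 500)
quick_check(x_demo)  # all three counts are 0

# Introduce one constant column, one duplicated row, and one missing value
x_bad <- as.data.frame(x_demo)
x_bad$V1 <- 0
x_bad[2, ] <- x_bad[1, ]
x_bad[3, 5] <- NA
quick_check(x_bad)   # all three counts are 1
```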

2.2.2 Single Model

```r
mod <- s_GLM(dat.train, dat.test)
```

```
06-30-24 10:56:52 Hello, egenn [s_GLM]

.:Regression Input Summary
Training features: 374 x 50
Training outcome: 374 x 1
Testing features: 126 x 50
Testing outcome: 126 x 1

06-30-24 10:56:52 Training GLM... [s_GLM]

.:GLM Regression Training Summary
MSE = 1.02 (97.81%)
RMSE = 1.01 (85.18%)
MAE = 0.81 (84.62%)
r = 0.99 (p = 1.3e-310)
R sq = 0.98

.:GLM Regression Testing Summary
MSE = 0.98 (97.85%)
RMSE = 0.99 (85.35%)
MAE = 0.76 (85.57%)
r = 0.99 (p = 2.7e-105)
R sq = 0.98

06-30-24 10:56:52 Completed in 5e-04 minutes (Real: 0.03; User: 0.02; System: 3e-03) [s_GLM]
```
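For a continuous outcome, a GLM with the Gaussian family is ordinary least squares, so `lm()` gives the same fit as `glm()` with its default family. The base-R sketch below fits `lm()` on simulated data and computes the summary metrics with a hypothetical helper, `reg_metrics`. It assumes, without confirmation from the rtemis documentation, that the percentages in parentheses are error reductions relative to predicting the mean of the outcome. The numbers above are consistent with that reading: 1 - sqrt(1 - 0.9781) is about 0.852, matching the 85.18% RMSE reduction, and under this definition the MSE reduction equals R sq (1 - SSE/SST).

```r
# Hypothetical helper: regression error metrics, plus percent reduction
# relative to a baseline that predicts mean(y) for every case (an
# assumption about how the percentages in the summaries are defined).
reg_metrics <- function(y, yhat) {
  err <- y - yhat
  base <- y - mean(y)
  mse <- mean(err^2)
  mse0 <- mean(base^2)
  mae <- mean(abs(err))
  mae0 <- mean(abs(base))
  c(MSE = mse, MSE_red = 1 - mse / mse0,
    RMSE = sqrt(mse), RMSE_red = 1 - sqrt(mse / mse0),
    MAE = mae, MAE_red = 1 - mae / mae0,
    r = cor(y, yhat))
}

# Simulated data in the same shape as above (base R only)
set.seed(2019)
sim_x <- matrix(rnorm(500 * 50), nrow = 500,
                dimnames = list(NULL, paste0("X", 1:50)))
sim_y <- drop(sim_x %*% rnorm(50) + rnorm(500))
sim_dat <- data.frame(sim_x, y = sim_y)

# Gaussian GLM with identity link, i.e. ordinary least squares
train_idx <- sample.int(500, size = 375)
fit <- lm(y ~ ., data = sim_dat[train_idx, ])
test_metrics <- reg_metrics(sim_dat$y[-train_idx],
                            predict(fit, newdata = sim_dat[-train_idx, ]))
round(test_metrics, 3)
```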

2.2.3 Crossvalidated Model

```r
# No learner specified: the log below shows the default, Ranger Random Forest
mod <- train_cv(dat)
```

```
06-30-24 10:56:52 Hello, egenn [train_cv]

.:Regression Input Summary
Training features: 500 x 50
Training outcome: 500 x 1

06-30-24 10:56:52 Training Ranger Random Forest on 10 stratified subsamples... [train_cv]
06-30-24 10:56:52 Outer resampling plan set to sequential [resLearn]
```

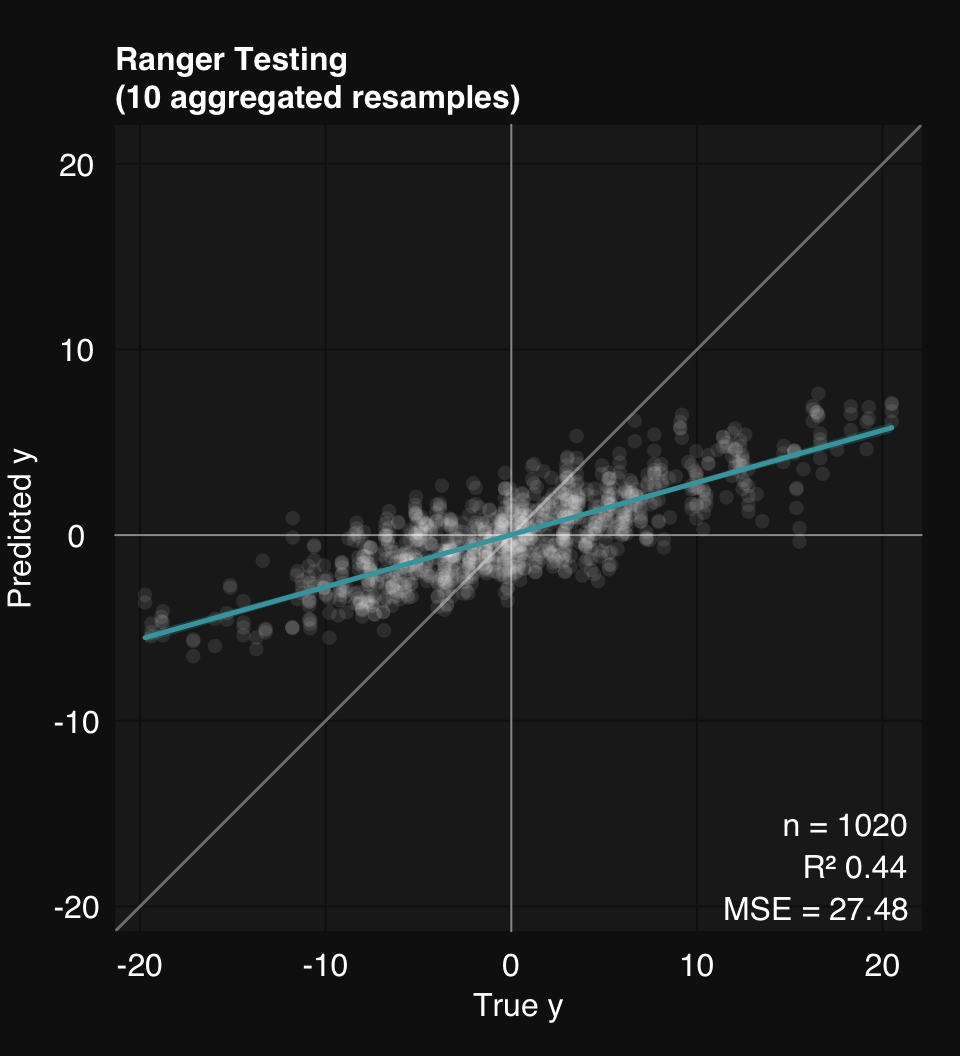

```
.:Cross-validated Ranger
Mean MSE of 10 stratified subsamples: 27.48
Mean MSE reduction: 44.11%

06-30-24 10:56:55 Completed in 0.04 minutes (Real: 2.59; User: 12.95; System: 0.32) [train_cv]
```
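Cross-validation by repeated subsampling fits the learner on each training subsample, scores it on the held-out cases, and averages the scores. A minimal base-R sketch of that loop follows. It is not how `train_cv()` is implemented: it swaps in `lm()` for the random forest so it runs without extra packages, uses unstratified random subsamples, and assumes the MSE reduction is measured against predicting the training-set mean. `cv_subsample` is a hypothetical helper.

```r
# Hypothetical sketch of cross-validation by repeated random subsampling.
# lm() stands in for the random forest, and subsamples are not stratified.
cv_subsample <- function(dat, n_resamples = 10, train_p = 0.75, seed = 2019) {
  set.seed(seed)
  n <- nrow(dat)
  per_resample <- vapply(seq_len(n_resamples), function(i) {
    train <- sample.int(n, size = round(train_p * n))
    fit <- lm(y ~ ., data = dat[train, ])
    y_test <- dat$y[-train]
    mse <- mean((y_test - predict(fit, newdata = dat[-train, ]))^2)
    # Baseline: predict the training-set mean for every test case
    mse0 <- mean((y_test - mean(dat$y[train]))^2)
    c(MSE = mse, MSE_red = 1 - mse / mse0)
  }, numeric(2))
  # Average each metric across the held-out sets
  rowMeans(per_resample)
}

set.seed(1)
sim_x <- matrix(rnorm(500 * 5), nrow = 500)
sim_dat <- data.frame(sim_x, y = drop(sim_x %*% c(2, -1, 0.5, 1, -2) + rnorm(500)))
cv_subsample(sim_dat)
```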

Use the `describe()` method to get a plain-English summary of the model:

```r
mod$describe()
```

```
Regression was performed using Ranger Random Forest. Model generalizability was assessed using 10 stratified subsamples. The mean R-squared across all testing set resamples was 0.44.
```

```r
mod$plot()
```

2.3 Classification

For classification, the outcome must be a factor. In binary classification, the first level should be the “positive” class.
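In base R, the first level of a factor is the first element of `levels()`. A minimal sketch of checking and changing it follows; the labels mirror the Sonar classes used below, where `M` is first and is reported as the positive class, but the toy vector itself is made up.

```r
# Toy outcome; the labels mirror the Sonar classes but the values are made up
y_raw <- c("R", "M", "M", "R", "M")

# factor() sorts the levels, so "M" becomes the first (positive) level
y_fac <- factor(y_raw)
levels(y_fac)    # "M" "R"

# To treat "R" as the positive class instead, move it to the first level
y_pos_r <- relevel(y_fac, ref = "R")
levels(y_pos_r)  # "R" "M"

# Equivalent: set the level order explicitly when creating the factor
y_pos_r2 <- factor(y_raw, levels = c("R", "M"))
identical(levels(y_pos_r), levels(y_pos_r2))  # TRUE
```

Reordering levels does not change the values themselves, only which class is treated as positive.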

2.3.1 Check Data

```r
data(Sonar, package = 'mlbench')
check_data(Sonar)
```

```
  Sonar: A data.table with 208 rows and 61 columns

  Data types
  * 60 numeric features
  * 0 integer features
  * 1 factor, which is not ordered
  * 0 character features
  * 0 date features

  Issues
  * 0 constant features
  * 0 duplicate cases
  * 0 missing values

  Recommendations
  * Everything looks good
```

```r
res <- resample(Sonar)
```

```
06-30-24 10:56:55 Input contains more than one columns; will stratify on last [resample]

.:Resampling Parameters
  n.resamples: 10
  resampler: strat.sub
  stratify.var: y
  train.p: 0.75
  strat.n.bins: 4

06-30-24 10:56:55 Using max n bins possible = 2 [strat.sub]
06-30-24 10:56:55 Created 10 stratified subsamples [resample]
```
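In the single-model step, `s_Ranger()` reports that the Sonar classes are imbalanced and that it applies inverse frequency weighting. One common form of such weights assigns each case n / (K * n_k), where n is the number of cases, K the number of classes, and n_k the size of that case's class, so every class receives the same total weight. This is a sketch under that assumption; rtemis may normalize its weights differently, and `ifw_weights` is a hypothetical helper. The class counts (83 M, 72 R) are the training-set totals from the single model's training confusion matrix.

```r
# Hypothetical helper: inverse frequency weights of the form n / (K * n_k),
# so each class gets the same total weight and all weights sum to n.
# rtemis's own normalization may differ.
ifw_weights <- function(y) {
  y <- as.factor(y)
  counts <- table(y)
  w_class <- length(y) / (nlevels(y) * counts)
  # Look up each case's weight by its class label
  as.numeric(w_class[as.character(y)])
}

# Class sizes of the single-model training set: 83 M, 72 R
y_train <- factor(rep(c("M", "R"), times = c(83, 72)))
w_ifw <- ifw_weights(y_train)
tapply(w_ifw, y_train, sum)  # each class totals 155 / 2 = 77.5
```

The smaller class (R) gets weights above 1 and the larger class (M) weights below 1.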

```r
sonar.train <- Sonar[res$Subsample_1, ]
sonar.test <- Sonar[-res$Subsample_1, ]
```

2.3.2 Single Model

```r
mod <- s_Ranger(sonar.train, sonar.test)
```

```
06-30-24 10:56:55 Hello, egenn [s_Ranger]
06-30-24 10:56:55 Imbalanced classes: using Inverse Frequency Weighting [prepare_data]

.:Classification Input Summary
Training features: 155 x 60
Training outcome: 155 x 1
Testing features: 53 x 60
Testing outcome: 53 x 1

.:Parameters
n.trees: 1000
mtry: NULL

06-30-24 10:56:55 Training Random Forest (ranger) Classification with 1000 trees... [s_Ranger]

.:Ranger Classification Training Summary
         Reference
Estimated  M  R
        M 83  0
        R  0 72

                   Overall
Sensitivity         1.0000
Specificity         1.0000
Balanced Accuracy   1.0000
PPV                 1.0000
NPV                 1.0000
F1                  1.0000
Accuracy            1.0000
AUC                 1.0000
Brier Score         0.0176

Positive Class: M

.:Ranger Classification Testing Summary
         Reference
Estimated  M  R
        M 25 11
        R  3 14

                   Overall
Sensitivity         0.8929
Specificity         0.5600
Balanced Accuracy   0.7264
PPV                 0.6944
NPV                 0.8235
F1                  0.7812
Accuracy            0.7358
AUC                 0.8643
Brier Score         0.1652

Positive Class: M

06-30-24 10:56:55 Completed in 1.8e-03 minutes (Real: 0.11; User: 0.19; System: 0.02) [s_Ranger]
```

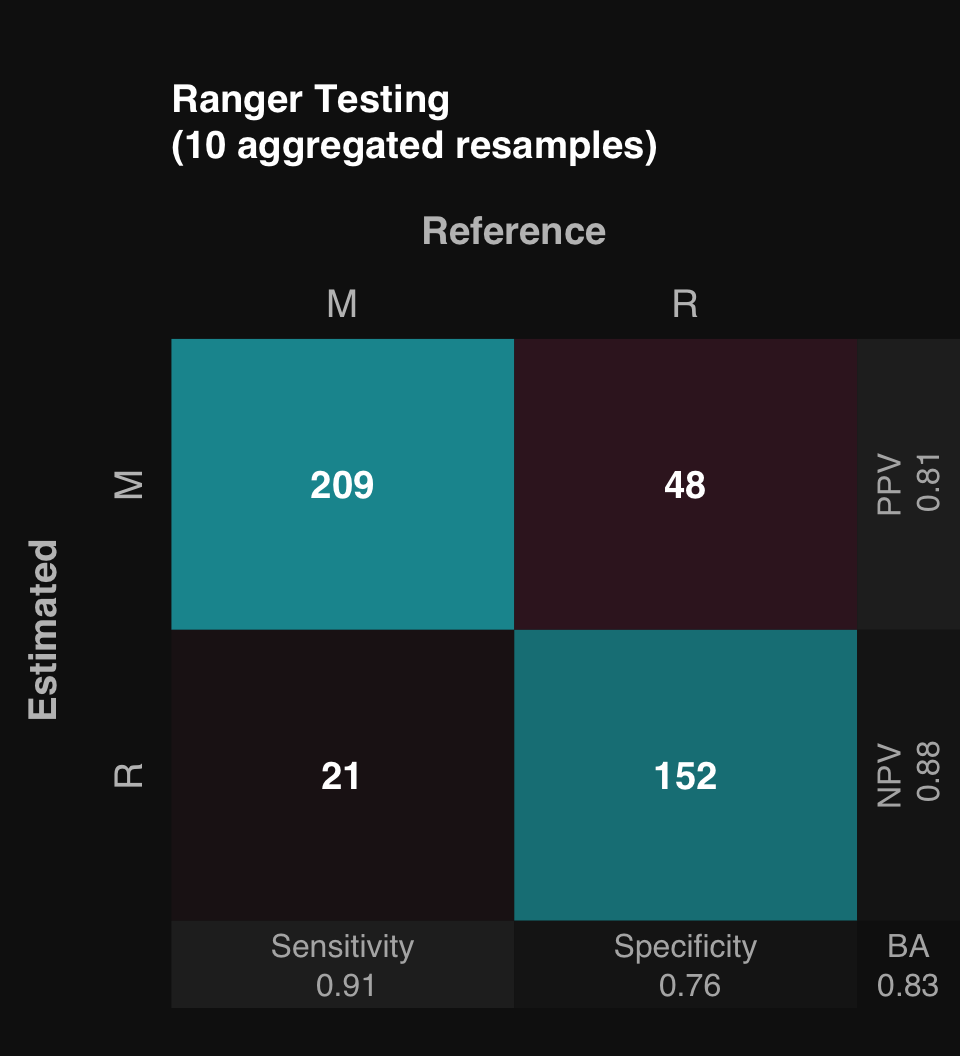
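Most of the testing metrics follow directly from the confusion matrix, with M as the positive class: 25 true positives, 11 false positives (estimated M, reference R), 3 false negatives, and 14 true negatives. The base-R sketch below recomputes them with a hypothetical helper, `binary_metrics`, that expects the layout printed above (rows = estimated, columns = reference, positive class first).

```r
# Hypothetical helper: binary classification metrics from a 2 x 2 confusion
# matrix with rows = estimated, columns = reference, positive class first.
binary_metrics <- function(cm) {
  tp <- cm[1, 1]; fp <- cm[1, 2]
  fn <- cm[2, 1]; tn <- cm[2, 2]
  sens <- tp / (tp + fn)
  spec <- tn / (tn + fp)
  ppv <- tp / (tp + fp)
  c(Sensitivity = sens,
    Specificity = spec,
    `Balanced Accuracy` = (sens + spec) / 2,
    PPV = ppv,
    NPV = tn / (tn + fn),
    F1 = 2 * ppv * sens / (ppv + sens),
    Accuracy = (tp + tn) / sum(cm))
}

# Testing-set confusion matrix from the summary above (filled column-wise)
cm_test <- matrix(c(25, 3, 11, 14), nrow = 2,
                  dimnames = list(Estimated = c("M", "R"),
                                  Reference = c("M", "R")))
round(binary_metrics(cm_test), 4)
```

AUC and Brier Score cannot be recovered this way because they depend on the predicted probabilities, not just the class assignments.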

2.3.3 Crossvalidated Model

```r
mod <- train_cv(Sonar)
```

```
06-30-24 10:56:55 Hello, egenn [train_cv]

.:Classification Input Summary
Training features: 208 x 60
Training outcome: 208 x 1

06-30-24 10:56:55 Training Ranger Random Forest on 10 stratified subsamples... [train_cv]
06-30-24 10:56:55 Outer resampling plan set to sequential [resLearn]

.:Cross-validated Ranger
Mean Balanced Accuracy of 10 stratified subsamples: 0.83

06-30-24 10:56:56 Completed in 0.01 minutes (Real: 0.78; User: 1.86; System: 0.30) [train_cv]
```

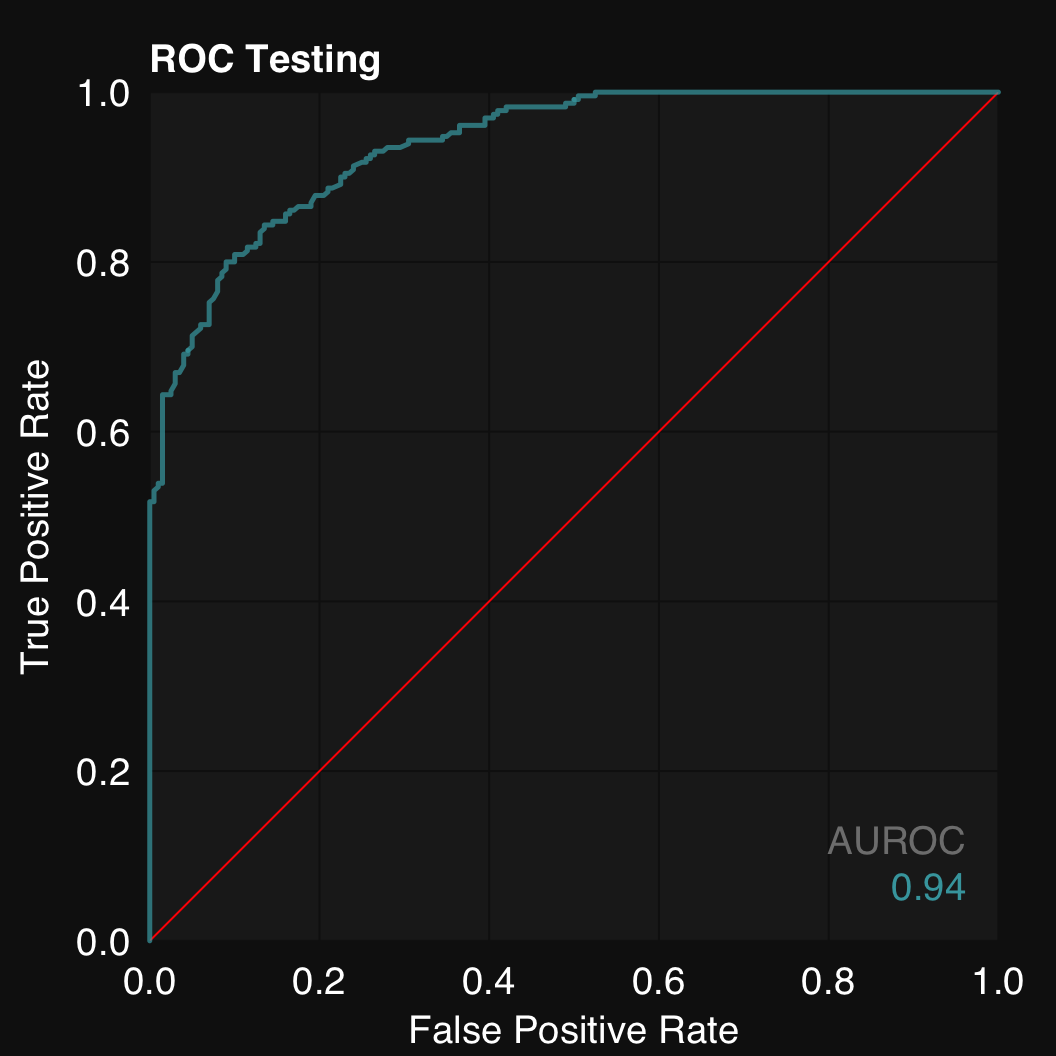

```r
mod$describe()
```

```
Classification was performed using Ranger Random Forest. Model generalizability was assessed using 10 stratified subsamples. The mean Balanced Accuracy across all testing set resamples was 0.83.
```

```r
mod$plot()
```

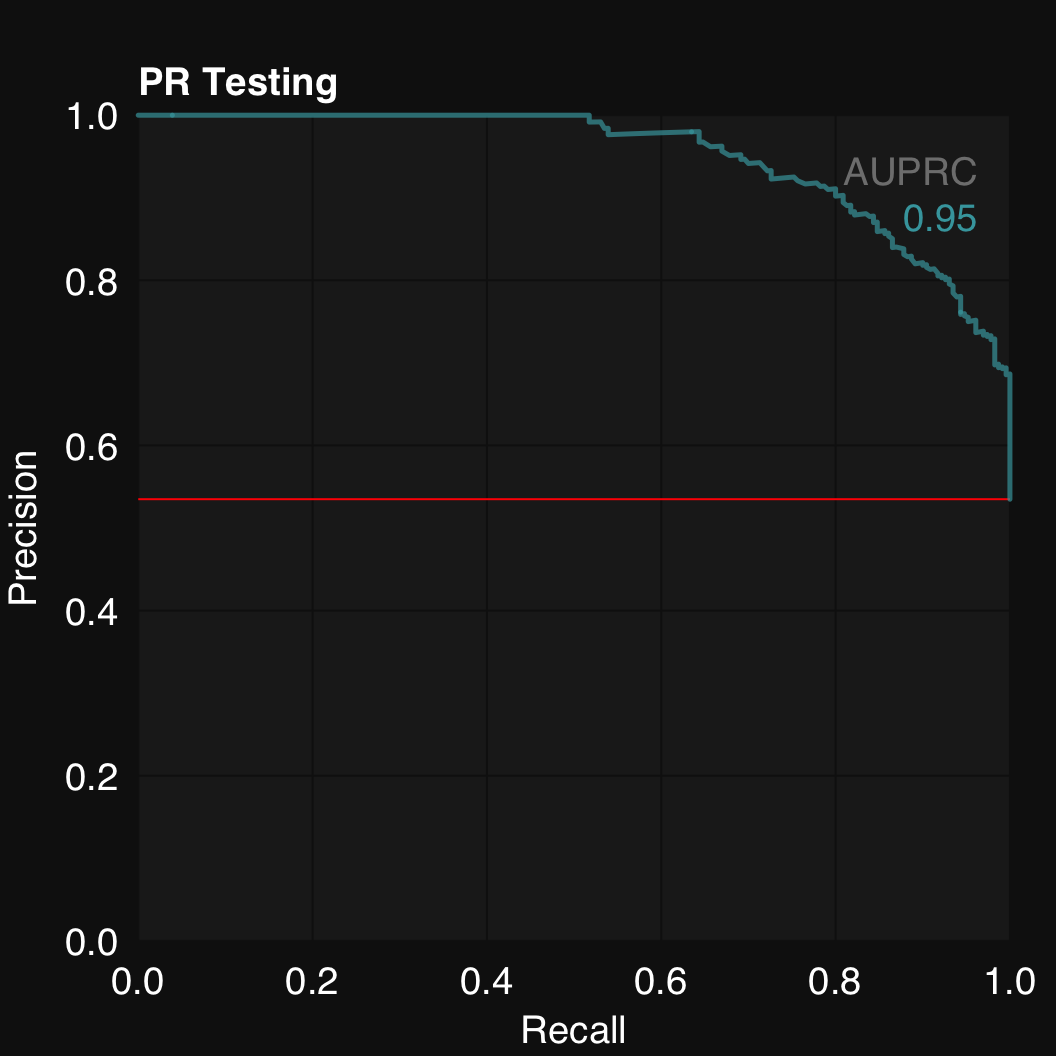

```r
mod$plotROC()
mod$plotPR()
```
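ROC and precision-recall curves are built from the predicted probability of the positive class, as are the AUC and Brier Score in the single-model summaries. The base-R sketch below uses hypothetical helpers and made-up toy data. It computes the AUC in its Mann-Whitney form, the fraction of positive-negative pairs in which the positive case gets the higher probability, with ties counted as one half. The Brier score is the standard binary definition, the mean squared difference between the predicted probability and the 0/1 outcome; rtemis may compute either quantity differently.

```r
# Hypothetical helpers; `prob` is the predicted probability of the
# positive class and `is_pos` is TRUE for positive cases.
auc_mw <- function(prob, is_pos) {
  pos <- prob[is_pos]
  neg <- prob[!is_pos]
  # Compare every positive with every negative; ties count as 1/2
  cmp <- outer(pos, neg, function(a, b) (a > b) + 0.5 * (a == b))
  mean(cmp)
}

brier <- function(prob, is_pos) mean((prob - as.numeric(is_pos))^2)

prob   <- c(0.9, 0.8, 0.7, 0.4, 0.3, 0.2)
is_pos <- c(TRUE, TRUE, FALSE, TRUE, FALSE, FALSE)
auc_mw(prob, is_pos)  # 8 of 9 positive-negative pairs ranked correctly
brier(prob, is_pos)
```

The pairwise form equals the area under the ROC curve, so a model that ranks every positive above every negative has an AUC of 1 even if its probabilities are poorly calibrated; the Brier score penalizes that miscalibration.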